Screaming Frog SEO Spider is an SEO auditing tool, built by real SEOs with thousands of users worldwide. A quick summary of some of the data collected in a crawl includes:

- Errors – Client errors such as broken links, and server errors (no responses, 4XX, 5XX).
- Redirects – Permanent and temporary redirects (3XX responses) and JS redirects.
- Blocked URLs – View and audit URLs disallowed by the robots.txt protocol.
- Blocked Resources – View and audit blocked resources in rendering mode.
- External Links – All external links and their status codes.
- Protocol – Whether the URLs are secure (HTTPS) or insecure (HTTP).
- URI Issues – Non-ASCII characters, underscores, uppercase characters, parameters, or long URLs.
- Duplicate Pages – Hash value (MD5 checksum) algorithmic check for exact duplicate pages.
- Page Titles – Missing, duplicate, over 65 characters, short, pixel width truncation, same as H1, or multiple.
- Meta Description – Missing, duplicate, over 156 characters, short, pixel width truncation, or multiple.
- Meta Keywords – Mainly for reference, as they are not used by Google, Bing, or Yahoo.
- H1 – Missing, duplicate, over 70 characters, or multiple.
- H2 – Missing, duplicate, over 70 characters, or multiple.
- Meta Robots – Index, noindex, follow, nofollow, noarchive, nosnippet, noodp, noydir, etc.
- Meta Refresh – Including target page and time delay.
- Canonicals – Canonical link element and canonical HTTP headers.
- Pagination – rel=“next” and rel=“prev”.
- Follow & Nofollow – At page and link level (true/false).

Render web pages using the integrated Chromium WRS to crawl dynamic, JavaScript-rich websites and frameworks, such as Angular, React, and Vue.js. Evaluate internal linking and URL structure using interactive crawl and directory force-directed diagrams and tree graph site visualizations.
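To make the Duplicate Pages, URI Issues, and Page Titles checks in the list above more concrete, here is a minimal Python sketch of how such checks can work in general. It is an illustration under stated assumptions, not Screaming Frog's code: the function names are hypothetical, the 65-character title limit is taken from the list, and the 115-character "long URL" limit is an assumed threshold. Exact duplicates are found by hashing each response body with MD5 and grouping URLs that share a digest; titles are measured in characters only, so pixel-width truncation is not modelled.

```python
import hashlib
from collections import defaultdict
from typing import Dict, List, Optional
from urllib.parse import urlsplit

# 65 characters comes from the Page Titles check; 115 characters for a
# "long" URL is a hypothetical threshold chosen for illustration.
MAX_TITLE_CHARS = 65
MAX_URL_CHARS = 115


def uri_issues(url: str) -> List[str]:
    """Flag the URI issues from the list for a single URL (hypothetical helper)."""
    parts = urlsplit(url)
    issues = []
    if not url.isascii():
        issues.append("non-ascii characters")
    if "_" in parts.path:
        issues.append("underscores")
    if parts.path != parts.path.lower():
        issues.append("uppercase characters")
    if parts.query:
        issues.append("parameters")
    if len(url) > MAX_URL_CHARS:
        issues.append("long url")
    return issues


def title_issues(title: Optional[str], h1: Optional[str] = None) -> List[str]:
    """Character-based title checks; pixel-width truncation is not modelled."""
    if title is None or not title.strip():
        return ["missing"]
    issues = []
    if len(title) > MAX_TITLE_CHARS:
        issues.append(f"over {MAX_TITLE_CHARS} characters")
    if h1 is not None and title.strip() == h1.strip():
        issues.append("same as h1")
    return issues


def exact_duplicates(pages: Dict[str, bytes]) -> List[List[str]]:
    """Group URLs whose response bodies share the same MD5 digest."""
    by_digest = defaultdict(list)
    for url, body in pages.items():
        by_digest[hashlib.md5(body).hexdigest()].append(url)
    # Only digests shared by two or more URLs indicate exact duplicates.
    return [sorted(urls) for urls in by_digest.values() if len(urls) > 1]


print(uri_issues("https://example.com/My_Page?id=1"))
# ['underscores', 'uppercase characters', 'parameters']
print(title_issues("Home", h1="Home"))
# ['same as h1']
print(exact_duplicates({"/a": b"<p>x</p>", "/b": b"<p>x</p>", "/c": b"<p>y</p>"}))
# [['/a', '/b']]
```

Hashing the whole body only catches byte-for-byte duplicates, which matches the "exact duplicate" wording; near-duplicate detection would need a different technique.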

With Screaming Frog you can quickly create XML Sitemaps and Image XML Sitemaps, with advanced configuration over the URLs to include and their last modified date, priority, and change frequency. Connect to the Google Analytics API and fetch user data, such as sessions, bounce rate, conversions, goals, transactions, and revenue, for landing pages matched against the crawl.
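Whatever tool generates it, an XML Sitemap follows the sitemaps.org format: a `<urlset>` of `<url>` elements, each with a required `<loc>` and optional `<lastmod>`, `<changefreq>`, and `<priority>`. The sketch below shows only that output step in Python, assuming the URLs and their values have already been chosen; the `SitemapEntry` and `build_sitemap` names are hypothetical and are not part of Screaming Frog.

```python
import xml.etree.ElementTree as ET
from dataclasses import dataclass
from typing import Iterable, Optional

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


@dataclass
class SitemapEntry:
    """One <url> element (hypothetical type); optional fields mirror sitemaps.org tags."""
    loc: str
    lastmod: Optional[str] = None     # W3C Datetime, e.g. "2024-01-31"
    changefreq: Optional[str] = None  # e.g. "daily", "weekly", "monthly"
    priority: Optional[float] = None  # 0.0 to 1.0


def build_sitemap(entries: Iterable[SitemapEntry]) -> str:
    """Serialize entries as a sitemaps.org <urlset> document."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for entry in entries:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = entry.loc
        # Optional tags are written only when a value was configured.
        if entry.lastmod is not None:
            ET.SubElement(url, "lastmod").text = entry.lastmod
        if entry.changefreq is not None:
            ET.SubElement(url, "changefreq").text = entry.changefreq
        if entry.priority is not None:
            ET.SubElement(url, "priority").text = f"{entry.priority:.1f}"
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


print(build_sitemap([
    SitemapEntry("https://example.com/", lastmod="2024-01-31",
                 changefreq="weekly", priority=0.8),
    SitemapEntry("https://example.com/about"),
]))
```

ElementTree escapes special characters such as `&` in URLs automatically, which the sitemap protocol requires.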

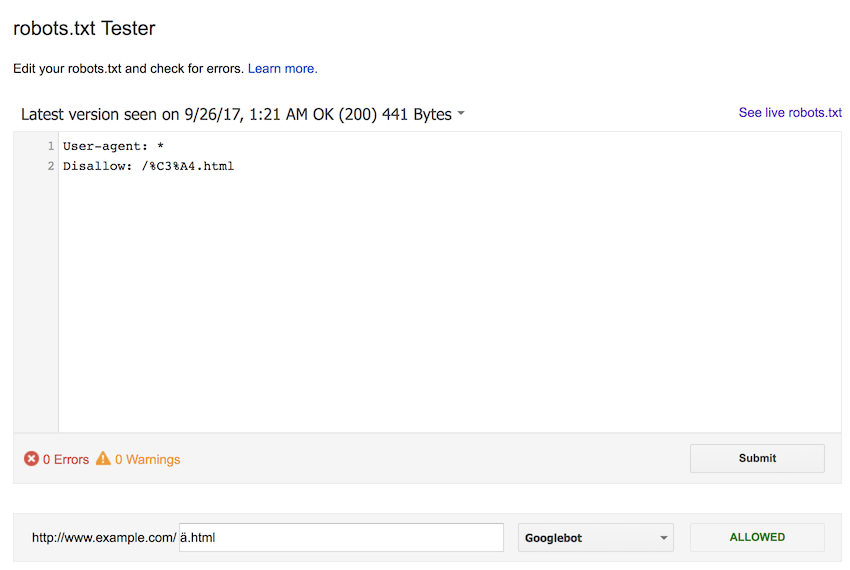

The SEO Spider app is a powerful and flexible site crawler, able to crawl both small and very large websites efficiently while allowing you to analyze the results in real time. It gathers key onsite data to allow SEOs to make informed decisions.

What can you do with the SEO Spider software?

- Crawl a website instantly and find broken links (404s) and server errors. Bulk export the errors and source URLs to fix, or send them to a developer.
- Find temporary and permanent redirects, identify redirect chains and loops, or upload a list of URLs to audit in a site migration.
- Analyze page titles and meta descriptions during a crawl and identify those that are too long, too short, missing, or duplicated across your site.
- Discover exact duplicate URLs with an MD5 algorithmic check, partially duplicated elements such as page titles, descriptions, or headings, and low-content pages.
- Collect any data from the HTML of a web page using CSS Path, XPath, or regex. This might include social meta tags, additional headings, prices, SKUs, or more!
- View URLs blocked by robots.txt, meta robots, or X-Robots-Tag directives such as ‘noindex’ or ‘nofollow’, as well as canonicals and rel=“next” and rel=“prev”.
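Two of the checks in the list above, finding URLs blocked by robots.txt and identifying redirect chains and loops, can be illustrated with the Python standard library. This is a hypothetical sketch, not how the SEO Spider works internally: it assumes the robots.txt text and a source-to-target redirect map have already been fetched, the function names are made up for illustration, and `max_hops=10` is an arbitrary limit. A chain of two or more hops counts as a redirect chain; revisiting any URL counts as a loop.

```python
from typing import Dict, Iterable, List, Tuple
from urllib import robotparser


def blocked_urls(robots_txt: str, urls: Iterable[str], user_agent: str = "*") -> List[str]:
    """Return the URLs that robots.txt disallows for the given user agent."""
    parser = robotparser.RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


def follow_redirects(start: str, redirects: Dict[str, str],
                     max_hops: int = 10) -> Tuple[List[str], str]:
    """Walk a hypothetical {source: target} redirect map from `start`.

    Returns the chain of URLs visited and a status: "ok" when the chain ends
    at a non-redirecting URL, "loop" when a URL repeats, or "too many hops".
    """
    chain = [start]
    seen = {start}
    current = start
    while current in redirects:
        if len(chain) - 1 >= max_hops:
            return chain, "too many hops"
        current = redirects[current]
        chain.append(current)
        if current in seen:
            return chain, "loop"
        seen.add(current)
    return chain, "ok"


robots = "User-agent: *\nDisallow: /private/\n"
print(blocked_urls(robots, ["https://example.com/", "https://example.com/private/report"]))
# ['https://example.com/private/report']

chain, status = follow_redirects("/old", {"/old": "/interim", "/interim": "/new"})
print(chain, status)  # ['/old', '/interim', '/new'] ok  (two hops: a redirect chain)
print(follow_redirects("/x", {"/x": "/y", "/y": "/x"}))
# (['/x', '/y', '/x'], 'loop')
```

Tracking every visited URL in a set means loops of any length are caught, not just a URL redirecting to itself.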


Screaming Frog SEO Spider is a website crawler that allows you to crawl websites’ URLs and fetch key elements to analyze and audit technical and onsite SEO. Download it for free, or purchase a license for additional advanced features.
